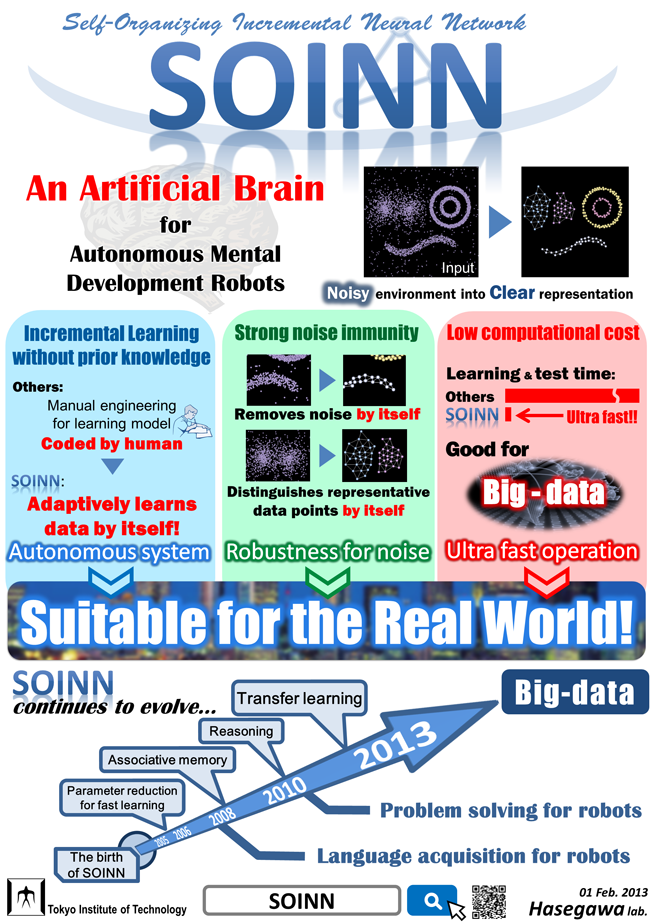

SOINN: Self-Organizing Incremental Neural Network

On this page, we introduce our unsupervised online incremental learning method, the Self-Organizing Incremental Neural Network (SOINN).

We will release the 2nd-Generation SOINN, which is designed based on Bayes theory.

What is SOINN?

SOINN is an unsupervised online learning method capable of incremental learning, based on Growing Neural Gas (GNG) and the Self-Organizing Map (SOM). For online data that is non-stationary and has a complex distribution, it can approximate the distribution of the input data and estimate the appropriate number of classes by forming a network in a self-organizing way. In addition, it has the following features: no need to predefine the network structure, high robustness to noise, and low computational cost. Thus, SOINN is especially effective for real-world data processing, and it can be used for learning and recognizing patterns such as image and sound data, or for intelligent robots that run online and in real time in real environments.
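To illustrate what "forming a network without a predefined structure" can look like, here is a minimal Python sketch of threshold-based node insertion. It is a toy simplification, not the published SOINN algorithm: it omits edges, the local error-based insertion criterion, node removal in low-density regions, and the two-layer design. The class name `ThresholdNetwork`, the threshold rule (distance to the nearest other node), and the `1/wins` learning rate are assumptions chosen for illustration.

```python
import math


class ThresholdNetwork:
    """Toy incremental prototype learner (hypothetical; not the full SOINN)."""

    def __init__(self):
        self.nodes = []  # prototype vectors, one list of numbers per node
        self.wins = []   # how many times each node has been the winner

    def _threshold(self, i):
        # Assumed adaptive similarity threshold for node i:
        # the distance from node i to its nearest other node.
        return min(
            math.dist(self.nodes[i], other)
            for j, other in enumerate(self.nodes)
            if j != i
        )

    def fit_one(self, x):
        """Present one input; either insert a new node or adapt the winner."""
        x = list(x)
        if len(self.nodes) < 2:
            # The first two inputs simply become nodes.
            self.nodes.append(x)
            self.wins.append(1)
            return
        # Find the nearest (s1) and second-nearest (s2) nodes.
        order = sorted(
            range(len(self.nodes)),
            key=lambda i: math.dist(x, self.nodes[i]),
        )
        s1, s2 = order[0], order[1]
        too_far_s1 = math.dist(x, self.nodes[s1]) > self._threshold(s1)
        too_far_s2 = math.dist(x, self.nodes[s2]) > self._threshold(s2)
        if too_far_s1 or too_far_s2:
            # The input is not similar enough to existing nodes: grow the network.
            self.nodes.append(x)
            self.wins.append(1)
            return
        # Otherwise move the winner toward the input with a decaying rate.
        self.wins[s1] += 1
        rate = 1.0 / self.wins[s1]
        self.nodes[s1] = [w + rate * (xi - w) for w, xi in zip(self.nodes[s1], x)]


net = ThresholdNetwork()
for point in [(0, 0), (10, 10), (0.5, 0), (100, 100)]:
    net.fit_one(point)
print(len(net.nodes))
```

In this run the third input is close to an existing node and only adjusts it, while the fourth input is far from everything learned so far and becomes a new node, so the number of nodes is determined by the data rather than fixed in advance.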

Published Papers

- Ph.D Thesis

- Shen Furao, “An Algorithm for Incremental Unsupervised Learning and Topology Representation”, Ph.D Thesis, Tokyo Institute of Technology, (2006) [PDF]

- Tutorial of SOINN

- Furao Shen and Osamu Hasegawa, "Self-organizing Incremental Neural Network and its Applications", Tutorial, International Joint Conference on Neural Networks (IJCNN 2009) [slide]

ABSTRACT

What is SOINN? SOINN is an unsupervised online learning method capable of incremental learning, based on Growing Neural Gas (GNG) and the Self-Organizing Map (SOM). For online data that is non-stationary and has a complex distribution, it can approximate the distribution of the input data and estimate the appropriate number of classes by forming a network in a self-organizing way. In addition, it has the following features: no need to predefine the network structure and high robustness to noise. Thus, we consider SOINN to be a very efficient method for real-world applications.

- Original SOINN

- Furao Shen and Osamu Hasegawa, "An Incremental Network for On-line Unsupervised Classification and Topology Learning", Neural Networks, Vol.19, No.1, pp.90-106, (2006) [PDF]

ABSTRACT

This paper presents an on-line unsupervised learning mechanism for unlabeled data that are polluted by noise. Using a similarity threshold-based and a local error-based insertion criterion, the system is able to grow incrementally and to accommodate input patterns of on-line non-stationary data distribution. A definition of a utility parameter, the error-radius, allows this system to learn the number of nodes needed to solve a task. The use of a new technique for removing nodes in low probability density regions can separate clusters with low-density overlaps and dynamically eliminate noise in the input data. The design of a two-layer neural network enables this system to represent the topological structure of unsupervised on-line data, report the reasonable number of clusters, and give typical prototype patterns of every cluster without prior conditions such as a suitable number of nodes or a good initial codebook.

- E-SOINN

- Furao Shen, Tomotaka Ogura and Osamu Hasegawa, "An enhanced self-organizing incremental neural network for online unsupervised learning", Neural Networks, Vol.20, No.8, pp.893-903, (2007)

ABSTRACT

An enhanced self-organizing incremental neural network (ESOINN) is proposed to accomplish online unsupervised learning tasks. It improves the self-organizing incremental neural network (SOINN) [Shen, F., Hasegawa, O. (2006a). An incremental network for on-line unsupervised classification and topology learning. Neural Networks, 19, 90–106] in the following respects: (1) it adopts a single-layer network to take the place of the two-layer network structure of SOINN; (2) it separates clusters with high-density overlap; (3) it uses fewer parameters than SOINN; and (4) it is more stable than SOINN. The experiments for both the artificial dataset and the real-world dataset also show that ESOINN works better than SOINN.

- SOINN-NN (Nearest Neighbor)

- Furao Shen and Osamu Hasegawa, "A Fast Nearest Neighbor Classifier Based on Self-organizing Incremental Neural Network", Neural Networks, Vol.21, No.10, pp.1537-1547, (2008)

ABSTRACT

A fast prototype-based nearest neighbor classifier is introduced. The proposed Adjusted SOINN Classifier (ASC) is based on SOINN (self-organizing incremental neural network); it automatically learns the number of prototypes needed to determine the decision boundary, and learns new information without destroying old learned information. It is robust to noisy training data, and it realizes very fast classification. In the experiment, we use some artificial datasets and real-world datasets to illustrate ASC. We also compare ASC with other prototype-based classifiers with regard to its classification error, compression ratio, and speed-up ratio. The results show that ASC has the best performance and it is a very efficient classifier.
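As a rough illustration of why a prototype-based classifier can be fast, the sketch below performs nearest neighbor classification over a small set of labeled prototypes instead of the full training set. It does not show how ASC learns or adjusts its prototypes; the function name `classify` and the hand-written prototype list are hypothetical and serve only as an example.

```python
import math


def classify(x, prototypes):
    """Return the label of the prototype closest to x (1-NN over prototypes)."""
    label, _ = min(
        ((lbl, math.dist(x, p)) for p, lbl in prototypes),
        key=lambda pair: pair[1],
    )
    return label


# Hypothetical prototypes: (vector, label) pairs standing in for learned nodes.
prototypes = [((0.0, 0.0), "a"), ((5.0, 5.0), "b")]
print(classify((1.0, 0.5), prototypes))  # prints "a"
```

The cost of one query grows with the number of prototypes rather than the number of training samples, which is where a compression ratio translates into a speed-up.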

Videos

Many other videos of our research are available on HasegawaLab's Channel on YouTube.

- SOINN Demo Applet